ChatGPT, an advanced AI language model created by OpenAI, is gaining popularity for its ability to generate human-like responses to natural language input.

28 Feb 2023

What is ChatGPT?

ChatGPT, an advanced AI language model created by OpenAI, is gaining popularity and attention for its ability to generate human-like responses to natural language input. Trained on large amounts of data, it can follow context and generate relevant responses, which has made it a popular choice for businesses seeking to enhance customer experience and operations.

Major technology corporations are making significant investments in Artificial Intelligence (AI). Microsoft, for instance, has declared that it will invest $10 billion in OpenAI and intends to integrate ChatGPT into its Azure OpenAI suite. This will allow businesses to incorporate AI tools into their technology infrastructure, including DALL-E, a program that generates images, and Codex, which transforms natural language into code.

While ChatGPT has several benefits for financial institutions, such as improving customer service and automating certain tasks, it also carries some risks that need to be addressed. Major banks and other institutions in the US have banned the use of ChatGPT within their organizations over concerns about sensitive information being entered into the chatbot.

Risks associated with incorporating ChatGPT

Let's delve into the potential risks that are currently being debated regarding the use of ChatGPT:

- Data Exposure: One potential risk of using ChatGPT in the workplace is the inadvertent exposure of sensitive data. For example, employees using ChatGPT to generate data insights and analyze large amounts of financial data could unknowingly reveal confidential information while conversing with the AI model, which could lead to breaches of privacy or security. Another observed form of data exposure involves private code: employees who paste code snippets into the chatbot may inadvertently submit sensitive data or proprietary information, such as API keys or login credentials, which could then end up in the training data.

- Misinformation: ChatGPT can generate inaccurate or biased responses, depending on how it was built and the data it was trained on. Financial professionals should use it with caution to avoid spreading misinformation or relying on unreliable advice. ChatGPT’s current version was trained only on data sets available through 2021, so it knows nothing of later events. In addition, the online data it draws on isn’t always accurate.

- Technology Dependency: While ChatGPT offers useful insights for financial decision-making, relying solely on technology risks sidelining human judgment and intuition. Financial professionals may misunderstand ChatGPT's recommendations or become over-reliant on them. Thus, maintaining a balance between technology and human expertise is crucial.

- Privacy Concerns: ChatGPT gathers a lot of personal data that users might unsuspectingly provide. Most AI models need a lot of data to be trained and improved. Similarly, organizations might have to process a massive amount of data to train ChatGPT. This can pose a significant risk to individuals and organizations if the information is exposed or used maliciously.
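
The data exposure risk described above, where code snippets carry API keys or login credentials, can be reduced by scrubbing obvious secrets from text before it is submitted to a chatbot. The following is a minimal Python sketch of that idea rather than a complete safeguard: the function name `redact_secrets` and both patterns are illustrative assumptions, and a real deployment would need a much broader, tested rule set.

```python
import re

# Hypothetical pattern: assignments such as  api_key = "..."  or  password: ...
# Groups: (1) credential name, (2) separator, (3) optional opening quote,
# (4) the secret value, (5) the matching closing quote.
ASSIGNMENT = re.compile(
    r"(?i)(api[_-]?key|secret|token|password)(\s*[:=]\s*)(['\"]?)([^\s'\"]+)(\3)"
)

# Strings shaped like AWS access key IDs ("AKIA" plus 16 uppercase letters/digits).
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def redact_secrets(text: str, placeholder: str = "[REDACTED]") -> str:
    """Return text with values matching the patterns above replaced."""
    # Keep the credential name, separator, and quotes; replace only the value.
    text = ASSIGNMENT.sub(
        lambda m: f"{m.group(1)}{m.group(2)}{m.group(3)}{placeholder}{m.group(5)}",
        text,
    )
    # Replace bare key IDs wherever they appear.
    return AWS_KEY_ID.sub(placeholder, text)


if __name__ == "__main__":
    snippet = 'API_KEY = "sk-12345"\nconnect(user, password=hunter2)'
    print(redact_secrets(snippet))
```

Pattern matching of this kind only catches secrets that look like the patterns it knows about, so it would complement the policies and training discussed in this article rather than replace them.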

External Risks associated with ChatGPT

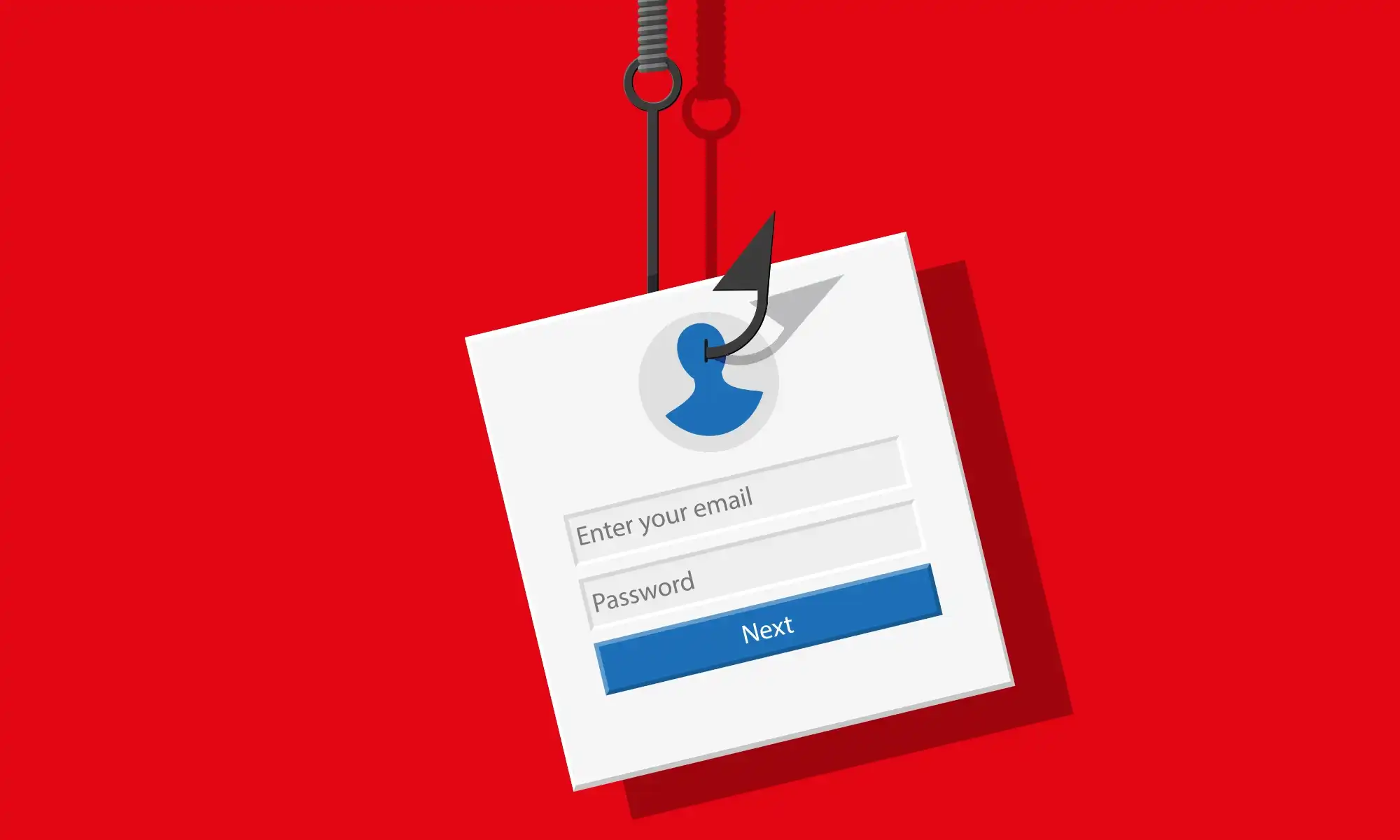

- Social Engineering: Cybercriminals can use ChatGPT to impersonate individuals or organizations and create highly personalized and convincing phishing emails. Because such messages are difficult for victims to recognize as attacks, phishing campaigns become more likely to succeed.

- Creating malicious scripts and malware: ChatGPT has been trained on vast amounts of code, and cybercriminals can exploit this to produce malware strains that are hard to detect and may bypass traditional security defenses. By using polymorphic techniques like encryption and obfuscation, this malware can dynamically alter its code and behavior, making it challenging to analyze and identify.

Recommendations

- Financial institutions should establish clear policies and guidelines for using ChatGPT in the workplace to safeguard confidential information and mitigate the risks of data exposure.

- Anonymized data should be used to train an AI model to protect the privacy of individuals and organizations whose data is being used.

- Specific controls should be applied to how employees use information from ChatGPT in connection with their work.

- Employees who have access to ChatGPT should receive awareness training on the potential risks associated with the technology, including data exposure, privacy violations, and ethical concerns.

- Access to ChatGPT should be restricted to limit the potential for data exposure and misuse of the technology.
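
As one illustration of the recommendation to use anonymized data, identifying fields can be replaced with keyed hashes before records are used for training or analysis. The sketch below is an assumption-laden example, not a prescribed method: the function `pseudonymize`, the field names, and the demo key are all hypothetical. Strictly speaking, this technique is pseudonymization rather than full anonymization, since anyone holding the key can link records back to the same individual.

```python
import hashlib
import hmac


def pseudonymize(record: dict, id_fields: list, key: bytes) -> dict:
    """Return a copy of record with the listed fields replaced by keyed hashes."""
    out = dict(record)  # leave the caller's record untouched
    for field in id_fields:
        if field in out:
            digest = hmac.new(
                key, str(out[field]).encode("utf-8"), hashlib.sha256
            ).hexdigest()
            # A shortened digest keeps records linkable without showing the value.
            out[field] = digest[:16]
    return out


if __name__ == "__main__":
    # Hypothetical record and key, for illustration only.
    customer = {"customer_name": "Jane Doe", "account_no": "12345678", "balance": 1000}
    print(pseudonymize(customer, ["customer_name", "account_no"], b"demo-key"))
```

The same input and key always produce the same token, so records belonging to one customer can still be joined, while non-identifying fields such as `balance` pass through unchanged. The key itself would need to be stored and access-controlled separately from the data.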